This article was originally written by Joao Tapadinhas, Chief Architect of Dyna.Ai. Read the original post here: https://www.linkedin.com/pulse/agentic-ai-revolution-why-starting-today-beats-joao-tapadinhas-k0dkf/?trackingId=ReRqqg2pT9iRkyYUbOAs8w%3D%3D

Agentic AI is evolving rapidly, and while these systems are not yet flawless, early adoption with proper risk controls ensures businesses stay ahead and maximize long-term gains.

Summary

Agentic AI is evolving at an exponential rate, with each new generation of models achieving breakthroughs that were recently thought unfeasible. While these agents and the underlying models are not yet flawless, the clear trend is that they will continue to improve rapidly. Organizations that start piloting agentic AI today can take advantage of incremental value while preparing for even more sophisticated capabilities tomorrow. By targeting task-level automation, maintaining strong human oversight, and implementing robust risk controls, businesses can capture transformative gains without assuming undue risk. Those that move early will not only gain compounding benefits but also develop the agility needed to integrate future advancements seamlessly—while those who hesitate may find themselves struggling to catch up in an AI-driven world.

Key Insights

- AI models now solve complex problems with dramatically higher accuracy than even a few months ago. Enterprises that base decisions solely on last year’s performance risk underestimating AI’s near-term potential.

- Delaying adoption means forfeiting valuable interim learnings and cost savings. Over time, early adopters accumulate process optimizations, skillsets, and data advantages that become difficult to match later.

- AI currently excels at automating discrete, repeatable portions of jobs, not entire roles. Even partial automation—30% to 50% of high-volume tasks—can yield significant gains in efficiency and productivity.

- Techniques like phased rollouts, continuous monitoring, decision safeguards, and human-in-the-loop reviews can prevent AI errors from causing reputational or regulatory harm, enabling safe and responsible scaling.

Recommendations

- Initiate agentic AI projects now so you can seamlessly upgrade to more advanced models during implementation. This future-proofs investments and accelerates competitive differentiation.

- Start with lower-risk use cases (e.g., basic support tickets) before moving into high-stakes areas. This approach builds internal buy-in, allows for process improvements, and identifies potential failure modes early.

- Position agents as digital “co-workers” or assistants, automating select tasks while humans handle complex decisions. This balances cost savings with maintaining the human judgment necessary for quality and innovation.

- Use automated alerts, human reviews, and robust logging to catch anomalies or “hallucinations” swiftly. Establish governance boards or committees to ensure ongoing alignment with business objectives and regulatory standards.

Introduction

While AI investment continues to surge, adoption remains a challenge—55% of organizations report using AI in at least one business unit, yet many struggle to scale projects beyond initial pilots. (Stanford AI Index) This stark reality captures the paradox AI leaders face: everyone sees the potential of AI, but few have realized it at scale. Now, a new wave of agentic AI – autonomous agents powered by advanced reasoning models – promises to break that stalemate. These AI agents can think through complex problems and act on our behalf. But should enterprises jump in now, given that today’s agents still make mistakes? The evidence says yes. AI capabilities are improving at an unprecedented pace. In just the last year, OpenAI’s latest reasoning AI model leaped from solving 13% of advanced math problems to 83%, far surpassing its predecessor. And OpenAI’s next-gen o3 model nearly tripled o1’s performance on general reasoning tests just months later – progress that would have seemed impossible a year prior.

Such rapid evolution is more than a technology story; it’s a strategic business inflection point. Forward-looking companies are realizing that by the time a well-planned AI initiative goes live, the underlying tech will be far more capable. In other words, the AI you deploy in 18 months won’t be the AI you start building with today – it’ll be better. Potentially, orders of magnitude better. This creates a compelling case to start investing in AI agents now. Enterprises that act today can ride the improvement curve, while those that wait for “perfect” AI may find they’ve waited too long and fallen behind.

In this article, we will explore the rapid evolution of AI agents and explain why being 'imperfect' doesn’t mean being 'ineffective.' We’ll build the business case for adopting agentic AI early, and outline how to do so responsibly – through phased deployments that manage risk and build value step by step. By the end, one message should be clear: when it comes to deploying AI agents in the enterprise, waiting is the riskiest move of all.

The Acceleration of AI Agents

The pace of AI agent advancement is dizzying. Over the past 12–18 months, AI researchers have cracked problems that stumped previous generations of models. Major providers are in an arms race to improve reasoning and autonomy in AI: OpenAI’s new “o-series” models (optimized for step-by-step reasoning) are a prime example. The flagship o1 model, released late 2024, spent more time “thinking” and managed to solve complex tasks at success rates previously unheard of. Its successor OpenAI o3 (debuted just a few months later) then delivered another quantum leap – scoring 87.5% vs 32% for its predecessor on a general problem-solving test, and attaining an International Grandmaster level in coding challenges. In plain terms, an AI agent’s skill at complex reasoning that was mediocre last summer became world-class by this spring. Such exponential improvement is redefining what AI agents can do.

And it’s not just OpenAI. DeepMind (Google) launched Gemini 2.0 “Flash”, incorporating a similar multi-step “thinking” process to boost reasoning. An experimental variant even breaks problems into sub-steps so the AI can reflect before answering – a technique that Google openly acknowledged was pioneered by OpenAI’s o1. Meanwhile, an open-source player, DeepSeek, released DeepSeek-R1 – a reasoning-optimized agent model that managed to match OpenAI’s o1 performance on key benchmarks. This was a watershed moment: cutting-edge AI agent capabilities are not confined to tech giants; they’re diffusing rapidly through the industry.

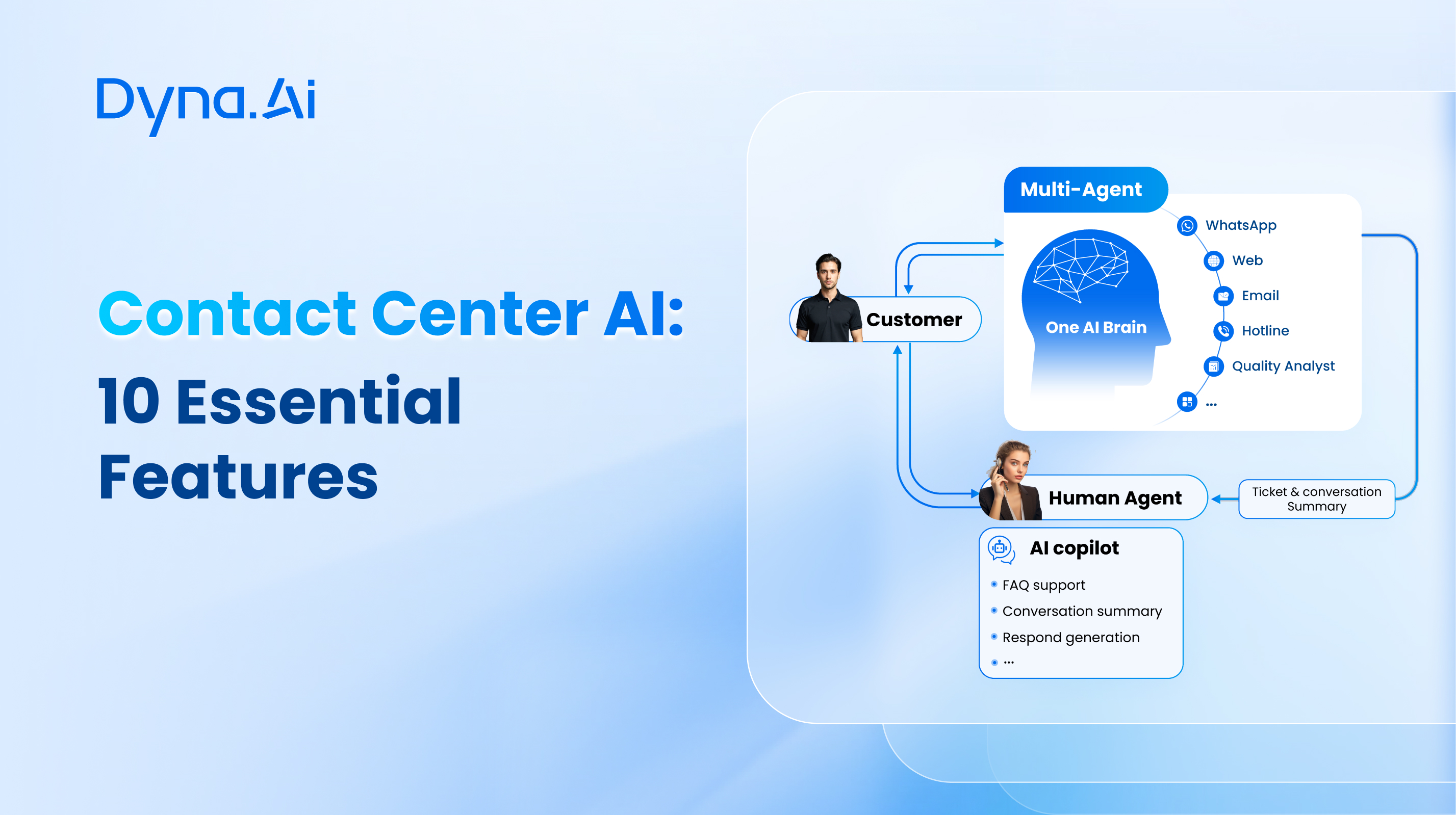

Crucially for enterprises, these advancements directly benefit agentic AI applications. Better reasoning means AI agents can handle more complex decision chains without human hand-holding – whether it’s troubleshooting IT requests, approving an insurance claim, or managing supply chain exceptions. We’re effectively witnessing the birth of AI “co-workers” that can autonomously carry out tasks that used to require significant human judgment. The trendlines indicate that each new generation of AI model brings us closer to agents that are more reliable, context-aware, and able to operate with minimal oversight. Moreover, the line between an AI model and an AI agent is blurring; by 2025 many models will have built-in tools and decision-making, essentially functioning as digital workers.

For AI leaders, the takeaway is that the capabilities of agentic AI are advancing far faster than typical enterprise tech cycles. What an AI agent couldn’t do well in 2024 might be done effortlessly in 2025. In previous technology shifts, business and technology leaders rarely had to assess and deploy solutions this quickly—and certainly not at the exponential speed of AI's evolution. Given this acceleration, evaluating AI agents based only on last year’s abilities will underestimate what they’ll deliver by the time you implement them. In short, we must assess agentic AI with an extrapolated view of its evolution. The next sections will build on this, discussing why acting early is prudent and how to leverage these fast-improving agents in a pragmatic way.

The Business Case for Investing Now

Why invest in AI agents now, when they still make mistakes? Because in business, timing is often the difference between market leaders and laggards. Waiting for AI to be “perfect” is a losing proposition – both because of the opportunity cost of delay and the compounding advantages of early adoption.

First, consider the time-to-deployment for enterprise AI projects. Rolling out any significant AI solution in a large company isn’t an overnight task – it can take months just to approve budgets and form teams, and a year or more to pilot and integrate into production. By the time your agentic AI system is fully deployed, today’s bleeding-edge AI will have become far more mature and capable. For example, if an organization had kicked off an AI agent initiative in early 2024, they might have started with GPT-4-level tech; by early 2025 they could upgrade to OpenAI’s o1 models during implementation and launch with far superior capabilities without having to restart the project. Waiting would have only meant forfeiting those interim gains. In essence, initiating an AI project now is a way of future-proofing – you position your enterprise to capitalize on each wave of improvement as it comes.

Second, there’s a growing competitive gap between early adopters and those holding back. Companies that experiment early don’t just get access to better tech down the road; they learn how to use it, adjust their processes, and build institutional knowledge. These are intangible assets that late adopters struggle to catch up on. Early movers also attract AI talent and set data infrastructure in place sooner. The payoff is tangible: early AI adopters are already outperforming peers. A recent analysis showed that companies adopting AI at scale with proactive strategies report profit margins that are three to 15 percentage points higher than the industry average. (McKinsey on AI ROI). Late adopters actually risk declines as they fall behind the efficiency curve. This illustrates a compounding effect: small automation wins today can snowball into major financial impact over time – but only if you start accumulating those wins.

Third, the cost of inaction is rising. Every quarter that passes, more of your competitors are infusing AI into their operations. Nearly 90% of Fortune 1000 firms are increasing AI investments and over 85% of executives believe AI is critical to staying ahead. If you choose to “wait and see,” you may be inadvertently ceding ground. By the time AI agents are foolproof (if that day ever comes), the business processes, market expectations, and even workforce skills around you will have evolved, favoring those who integrated AI early. History has shown with technologies like cloud computing or mobile apps: the longer one waits, the harder it is to catch up once the tech becomes standard.

That said, investing now doesn’t mean gambling the company and your career on unproven tech. It’s about starting small but strategic. The business case for AI agents should be built on incremental value and learning – not a big bang. You should identify use cases where even a partial automation by an AI agent can save significant time or money, frame it as a pilot with controlled scope, and highlight how those savings will compound when scaled. By presenting a clear ROI story (e.g. “If an agent handles 30% of Level-1 support tickets, we save $X million annually”), AI leaders can justify the investment today, while pointing to the trajectory of technology improvements as a bonus that will make those numbers even better by launch.

The key message: Don’t evaluate agentic AI only as it exists in this moment – factor in where it’s headed. Savvy AI leaders invest in what will be, not just what is. Jumping in early on AI agents – with a smart plan – is the best way to secure tomorrow’s advantages and avoid playing catch-up later.

Scalable Automation, Not Role Replacement

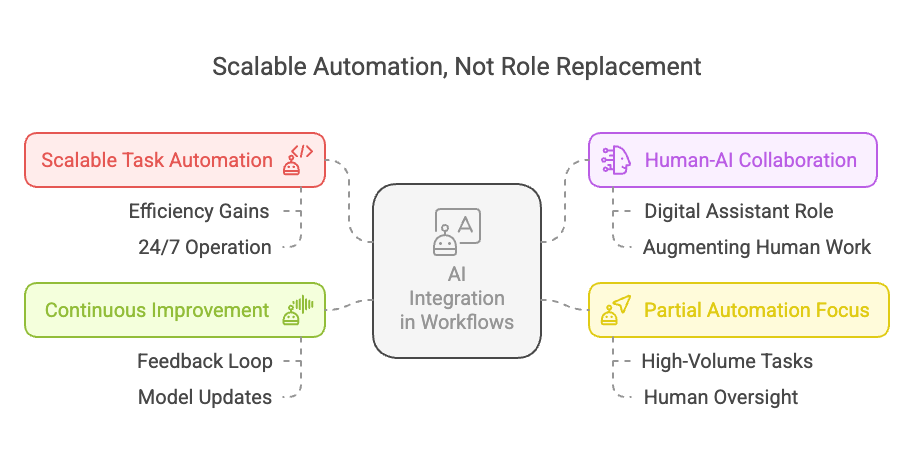

Amid excitement about AI agents, leaders must keep expectations grounded: for the foreseeable future, even the best AI will have failure rates in certain tasks. Follow a pragmatic adoption mindset: aim for scalable task automation, not outright job elimination. The distinction is key to gaining support and avoiding disappointment.

AI excels at tasks, not entire jobs. Research shows that while only ~5% of occupations can be fully automated end-to-end, the majority of jobs have 20–50% of activities that can be automated with current technology. That means most employees will have some tasks that an AI agent could handle much faster or at greater scale, and other tasks that still require human judgment, creativity, or empathy. AI leaders should identify those specific tasks or process segments that are ripe for automation. For example, an agent might handle data gathering and initial analysis in an analytics team, but the final interpretation and strategy recommendations come from humans. By carving out pieces of workflows that are highly automatable, companies can scale up efficiency (because the agent can run 24/7, handle volume spikes, etc.) without pretending the AI can do everything.

Design for human-AI collaboration. Rather than viewing the AI agent as a replacement for a person, position it as a digital assistant or copilot for your teams. This framing is powerful for two reasons: it sets realistic boundaries on the AI’s role, and it reassures your workforce that the goal is to augment their work, not make them obsolete. For instance, in a customer support department, you might deploy an AI agent to draft responses or resolve simple inquiries, while human agents focus on complex or sensitive cases. The human staff now have more time and energy to devote to higher-value interactions, guided by the AI’s legwork on simpler tasks. Over time, this can dramatically improve productivity and service quality – one agent might effectively do the work of many, but under human guidance for the tough calls. Such a hybrid model plays to the strengths of both: AI’s speed/scale and humans’ judgment/creativity.

Aiming for “90% automation” instead of 100%. In engineering terms, fully autonomous systems often hit a wall of diminishing returns (the last few percent of performance are the hardest). The same applies to AI in business processes. Trying to automate 100% of a role will incur exponentially more effort (and risk) than targeting partial automation. And it may not even be necessary – automating even 30-50% of high-volume tasks can yield huge cost and time savings. By planning for a human fail-safe or oversight at the edge of the AI’s abilities, you avoid pushing the technology beyond its reliable limits. For example, let the AI handle invoice processing, but have humans review exceptions or a random sample of outputs. This ensures that the inevitable errors (which might be 1-5% of cases) don’t derail the operation. Yes, the AI agent might still occasionally stumble on an unusual case, but those are caught by design. Meanwhile, you’re benefiting from the vast majority of cases it handles correctly.

Prepare for continuous improvement, not one-and-done. Embracing scalable task automation means accepting that AI agents will be an ongoing work-in-progress in your operations. There will be updates, new versions, and tweaks needed as they improve (remember, the tech is evolving fast). Build processes to capture error cases and feed them back into model improvements or re-training. Make it a cycle: the AI automates at scale, humans handle the edge cases and provide feedback, which then helps the next iteration of the AI handle more of those cases. Over time, the portion of work the AI can do reliably will grow – but you’ll always have a safety net for the rest. This approach both maximizes value and minimizes disruption.

By focusing on task-level automation at scale, enterprises can achieve significant ROI without the unrealistic requirement of flawless AI. Success lies in integration, not substitution—blending AI agents into the workforce such that humans and machines each do what they’re best at. Companies that master this synergy will gain tremendous efficiency, all while maintaining control over quality and outcomes.

Risk Management in Agentic AI

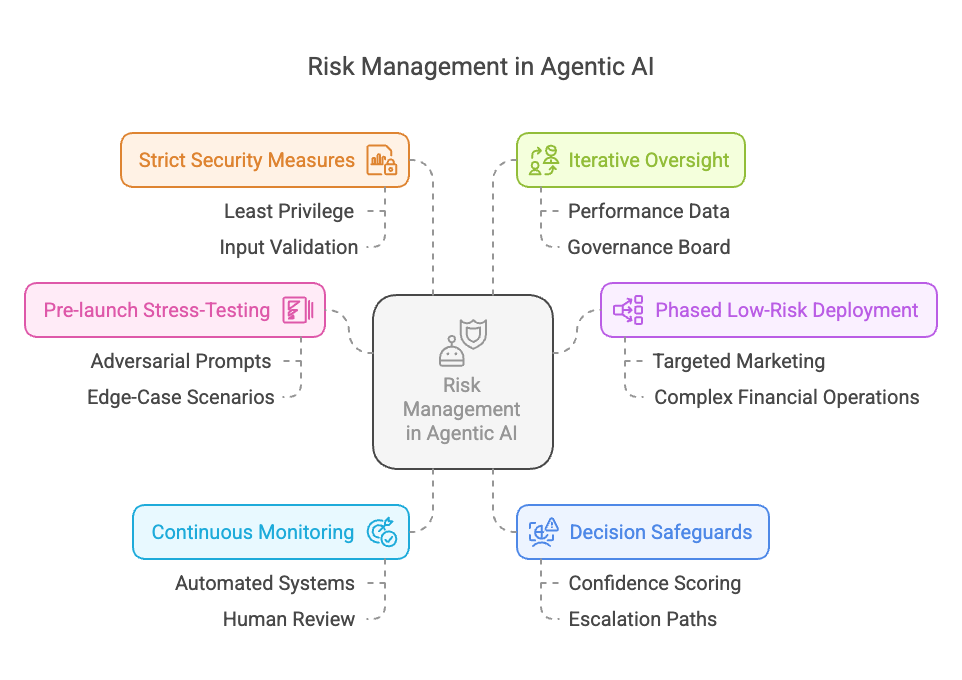

Agentic AI can deliver transformative benefits, but it also introduces risks that AI leaders must actively manage. These include “hallucinations,” where the AI confidently generates false or misleading information; bad decisions caused by flawed data or goals; inconsistent outputs that erode user trust; potential security vulnerabilities; and downstream disruptions in critical workflows. If left unaddressed, these can harm customers, undermine compliance, and damage brand reputation. Below is a practical, approach to uncover, monitor, and mitigate these risks.

Pre-launch stress-testing

Begin by stress-testing your agent in a safe environment. Challenge it with adversarial prompts, malformed data, and edge-case scenarios to pinpoint potential failure modes—like contradictory reasoning or logical loops. For example, if the agent will handle financial transactions, see how it reacts to extreme market volatility or ambiguous user requests. Document each shortcoming and refine the system accordingly. This up-front effort prevents costly surprises when the AI encounters real-world complexity. Think of it as a “red team” exercise: you want to break the AI in-house so you can reinforce it against future breakdowns.

Phased low-risk deployment

As a next step, start applying agentic AI in lower-risk scenarios to limit potential impact. For instance, use it for targeted marketing campaigns or routine sales calls, where missing a lead or providing an imperfect pitch causes minimal damage, before deploying it for complex underwriting or large-scale financial operations that can involve millions of dollars. By gradually scaling from smaller, low-stakes applications to higher-stakes domains, you build organizational confidence in the AI’s reliability and catch hidden pitfalls early. This phased approach prevents catastrophic missteps and ensures that the system matures before taking on critical responsibilities.

Continuous monitoring

Once deployed, vigilant monitoring is essential. Set up automated systems to track the agent’s actions and detect anomalies—such as spikes in errors, unusual response times, or drastic shifts in behavior. Log all outputs and decisions for troubleshooting and audits. Alongside automation, maintain a human-in-the-loop review process for sensitive tasks (e.g., financial approvals, regulatory compliance). People can catch subtler issues like tone inconsistencies or borderline policy violations that metrics alone might miss. By combining automated alerts and human judgment, you ensure the AI’s outputs remain aligned with organizational goals and ethical standards.

Decision safeguards

Agents sometimes generate bogus content or skewed recommendations. To counter this, use confidence scoring or self-checking mechanisms. If the AI’s confidence falls below a threshold, direct the task to human oversight or a simpler rule-based fallback. For instance, a customer support agent could escalate unusual refund requests for manual review. Clearly define escalation paths: if the AI identifies a high-risk scenario, it must flag a supervisor before taking action. These guardrails ensure that when the model “hallucinates” or becomes uncertain, it does not proceed unchecked.

Strict security measures

Agentic AI can act with autonomy, so limit its privileges to only what it truly needs. Apply the principle of least privilege—whether it’s reading databases or performing system commands. Implement input validation to thwart adversarial hacks and prompt injection attempts. In regulated industries like finance or healthcare, embed compliance checks into the AI’s workflow. For example, an insurance claims agent might block decisions violating local regulations. Keep detailed logs of the agent’s activities and regularly audit them. If the AI has to handle private user data, ensure it follows standards such as GDPR or HIPAA. By treating agentic AI as a high-risk system with rigorous checks, you reduce the chance of unauthorized actions or data misuse.

Iterative oversight

Risk management is an ongoing effort. Collect performance data—error trends, user complaints, near-miss incidents—and feed it back into model updates or prompt adjustments. Frequent check-ins help detect model drift, where accuracy erodes over time. Consider forming a governance board or AI oversight committee that reviews the AI’s outputs, logs, and ethical implications. This board can also define guidelines around transparency and fairness, ensuring the AI’s evolution remains aligned with organizational values. Good governance fosters trust among stakeholders, reinforcing that safety and reliability are top priorities.

By combining these six recommendations, AI leaders can confidently deploy agentic AI without exposing their organizations to unacceptable risk. While no system is ever risk-free, a proactive, methodical approach to managing AI failure modes helps capture agentic AI’s benefits while protecting customers, business teams, technology infrastructures, and even your career as an AI leader.

Conclusion

Investing in agentic AI now is not just an opportunity—it’s a necessity. As the technology rapidly evolves, waiting for it to become “perfect” only ensures missed chances and a steeper climb when adoption becomes inevitable. Companies that move early gain invaluable experience in fine-tuning their structures, data pipelines, and workflows, creating a competitive advantage that late adopters will struggle to replicate. Meanwhile, as AI reshapes industry benchmarks in efficiency, personalization, and innovation, those who delay will find themselves scrambling to keep up.

However, successful adoption requires a balanced approach. Rather than attempting to replace entire roles, organizations should focus on task-level automation, targeting high-volume, rules-based processes where even “imperfect” AI can deliver immediate returns. This strategy allows businesses to integrate AI effectively while maintaining human oversight for complex or strategic decisions. Establishing continuous feedback loops—monitoring AI performance, collecting error data, and refining collaboration between humans and machines—ensures that as technology advances, the organization remains agile and prepared to evolve.

At the same time, proactive risk management is essential to harness AI’s power without exposing the organization to unnecessary risks. Phased deployments, thorough stress-testing, and clear decision safeguards help mitigate AI’s occasional errors, such as “hallucinations” or logical missteps. In high-stakes areas like financial approvals or regulatory compliance, maintaining a human-in-the-loop review process provides a critical safety net. By embedding strong governance structures and security protocols from the start, companies can confidently scale AI adoption without jeopardizing legal or reputational standing.

Ultimately, the benefits of agentic AI—greater productivity, enhanced decision-making, and new creative solutions—far outweigh its current limitations. Organizations that take decisive but measured steps today will be well-positioned to capitalize on AI’s increasing reasoning and autonomy. Those that hesitate risk falling behind, struggling to adapt as AI transitions from a competitive advantage to an operational necessity.